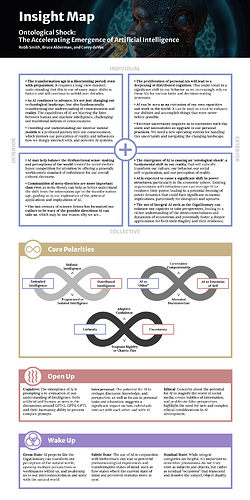

A new “Insight Map” I created for this discussion.

We do love our maps.

To the above Insight Map, I might add a few things:

in UL quad, to bullet point two: The capabilities of AI can also blur the lines between the generic and the specifically personal, challenging how we attend to individuality. (a current problem across institutions, e.g. banking, immigration/visas, medicine)

in LL quad, to bullet point three, after “science fiction,” I might add… as well as unfulfilled past promises of technology as near-panacea for human disconnection and isolation (Granted, the core polarities map speaks to problems encountered with social media, but I do think such a statement is relevant here too as part of the reason for “wariness.” Actually, there are a lot of reasons for why some people are wary, but these seem most fitting with this particular map.)

in UR quad, to bullet point one, after “behavior,” I might add… in as yet not fully known ways

Same quad, bullet point two, after “accomplish things,” I might add…for good and/or ill

In LR quad, to bullet point one, after “transform,” I might add…in as yet unknown ways.

There. I think I’ve made it better.

Most of your suggested edits won’t fit these little boxes.

I like your UL suggestion, but it’s not a topic we really talked about in the actual discussion.

I gave you the second bullet in the UR. At the same time, I think I speak to your appeal to uncertainty in that third bullet.

Also I had the wrong bullet in the LL. Fixed.

Thanks for taking a look!

Definitely better, I think. I like the fix in the LL, and also your responsiveness to feedback! Thanks.

I just hope these are useful. They take a good amount of time to create, but I am hoping that it makes our content experience more satisfying, helps folks better retain the major takeaways from our discussions, and helps us bring more visibility to the insights and wisdoms that are coming through our content, while making those insights a little bit easier to communicate.

Ah-ha!

This is the crux of the matter. What is the “truth” about the nature of our existence?

How much of this “generic” vs “personal” have humans merely been hallucinating for millennia and how much is “real”? Or, “is there any objective reality at all or is everything subjective?” vs “Do I even objectively exist at all?”

I would say that perhaps this new hallucination onto “AI” is the same discussion that has been going on for 30,000 years, ever since early humans stared at the fires in their caves for hours every night, achieved altered states, and invented the concept of Gods. The question is as old as human consciousness itself - and has never actually been proven conclusively on any side. This modern technological aspect of this topic is just a new group of people joining the discussion. Polytheism is dead, Monotheism is dead, various other -isms (Gods of another name) are dead or dying. People want to believe (I judge desperately) that there is something else “out there”. Gods, Aliens (science fiction) and now AI. Humanity is lonely I guess. This new AI makes people want to think “Hey - we are doing something new!” but (sad lol) - it’s the same old questions projected onto a new manifestation of “The Gods”.

This universal yearning of humans to connect to an “other” has been explained in several ancient traditions. Kabbalism comes most clearly to my mind right now, but there are similar concepts with different terminology in many traditions. Most of the time these ideas were not to be presented to the masses, but only to the more advanced or secret groups. I think only since the 1990s has there been a widespread dissemination of these ideas.

I find them useful, but then I love maps, even paper ones. They also take a good amount of time to absorb; I find myself returning to them again and again for deeper understanding of what’s being communicated. Like most of Integral content, they require something of a studious mind; there is a lot of complex information condensed into those “little boxes.” The whole map is aesthetically pleasing in design, so I like it on that level too.

I find it interesting that the ChatGPTs are called “large language models.” That terminology lends itself to a (probably) unintentional and indirect and somewhat back-handed suggestion we humans need to pay attention to ‘large language.’ Words and concepts that are BIG, like consciousness, like transformation, like reality, and like intelligence itself. When I come across these words here in relation to AI, I at times feel a little energetic stumble in myself, a tripping-over or hesitation, a pause–it’s sometimes like I’ve never even seen those words before. So I sense a little trickster-spirit in the jolting/shocking emergence of AI, saying “wake up, wake up!” And “let go attachments to what you thought you knew.” The word ‘transformation’ for instance has different meanings in different disciplines. Here at Integral, we tend to sort of automatically think of it in the developmental sense and give it a positive connotation. But the word isn’t limited to that particular meaning or connotation. This may be one of the unintended by-products of AI, encouraging us to really pay attention to words and how we mean them, how we’re using them, what new or expanded definitions might need to be explored.

According to both mythology and archetypal psychology, if one learns the lessons of trickster-energy, we laugh, are delighted. But it usually requires a certain amount of sober-mindedness, seriousness even, and balance (as with polarities) to get there, which your maps are pointing to, I think, and helping with.

One of my favorite authors is George Orwell, not just for the concepts in his books 1984 and Animal Farm and not even The Road to Wigan Pier. What I appreciate is that he was able to take previously unspoken concepts and bring them into form with plain language that hits you in the gut. I love the word “duckspeak”, for example - which is what ChatGPT starts to sound like after 30 seconds of monologue.

There is a place for language that you feel in the head, but I think I hear it far too much in modern society, especially among many of my “conscious” friends and social networks.

There is a speech practice I learned about 20 years ago, I think from Richard Bandler. The idea is to move your attention to your different “chakras” when you speak to get deeper reactions according to your desired wishes.

When people start to drone on in their heads for more than 30 seconds, I just interrupt them. It has to be a pretty complex idea to require more than 30 seconds but what I see is people using 5 minutes to hear themselves say what could be said in 10 seconds. It usually shows to me some kind of deep rooted feeling of insecurity in the speaker, as if the more they say the same thing the more they reinforce their own self image.

I also wonder the degree to which this is cultural. I took a Masters’ Certificate from an Australian University, and at that university the professors gave maximum word limits. We had to express complex Masters level ideas, but using 75% fewer words than in a North American assignment of the same level. In North America, academics learn to bloat their language due to minimum page requirements. Reducing verbiage and long windedness is a skill most North American academics lack. (see what I did there)

So back to AI and the meaning of consciousness - is consciousness only in the head, as much of Western Philosophy seems to think? Will AI ever be able to “speak to the gut”, “speak from the loins” or “speak from the heart”? Are these equally parts of Human consciousness? In order to speak in these ways, humans actually “shut off” their cerebral part of their brains to some degree. I can’t imagine whispering sweet nothings to an AI that is always in full-on cerebral mode.

Is it possible to intellectually learn to “speak from the heart”? I don’t think it is.

I too find that interesting, if only because developmental researchers also use something like “language models” to create their assessments. I am always interested when we start seeing an overlap of terminology from otherwise non-related fields.

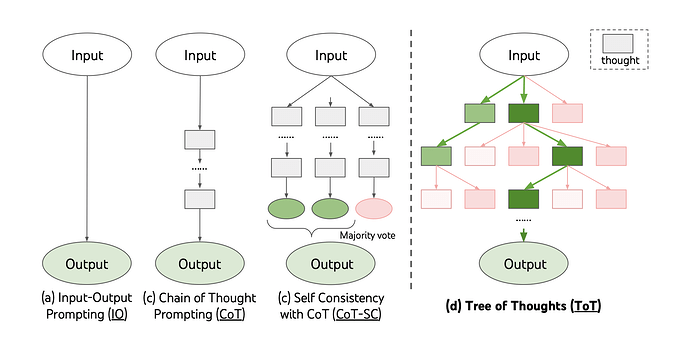

Similarly, there is a new kind of GPT prompting structure that folks are talking about, and I am experimenting with, called the “Tree of Thoughts” framework, which has notions of depth and span (which they call “breadth”) associated with it. As more and more of these overlaps come onto our horizon, it’s not hard to see how Integral could make a potentially large contribution to the field of artificial intelligence, particularly the way it generates, manages, and distributes knowledge.

Another thing I’ve been thinking about recently, is just how strange all this feels, and how much of that strangeness I think can be attributed to an uncanny-valley like effect that is evoked when playing with these technologies. There is a subtle sense of “haunting”, almost, which I think is due to the fact that we are interfacing with these systems using language, our primary intersubjective technology to exchange understanding from one subject to another subject. Language is for subject-subject relationships, not for subject-object relationships. We don’t talk to dead matter — and when we do, we know we are being a bit silly and really just talking to ourselves, because it doesn’t talk back.

GPT, however, does talk back. So when we engage with AI using the intersubjective technology of language, there is a natural expectation that there is another subject somewhere on the other end. And while we cognitively know there isn’t, I think there are likely tens of thousands of years of evolutionary instinct somewhere in our brain stem insisting that GPT possesses an actual bona fide subjectivity, or else we would not be capable of interacting with it via language.

As this continues to emerge and AI becomes ever more ubiquitous, will we be living the majority of our lives in an informational space that is constantly evoking this “uncanny valley” feeling? I wonder what that will do to our long term mental health!

I love these questions. For me, it’s almost like asking “can subtle energies be transmitted via gross matter (e.g. electromagnetic patterns in a computer)?”

And I think that, in many ways, it can — for example, we can discern “heart” in a sentence, or in a poem, transmitting through the idle signifiers that we see on the page. It seems to be one of those “it’s not what you say, but how you say it” type deals.

In the main talk, Bruce raises the point that it is hypothesized that being polite to GPT actually produces higher-quality results. And my sense is, there may be something like a subtle-energy pattern making happening here. Perhaps good data is simply more “polite” or more “respectful” than bad data? Maybe including polite terms in your prompt is resulting in higher-quality pattern-making, leading it to higher quality sources and segments in its training data?

I am not sure of the mechanism here, but it makes me wonder if perhaps this sort of overall pattern recognition is enough to capture and re-transmit some of the “heart” that exists in ordinary human interactions. It certainly seems capable of simulating more dense forms of subtle transmission (emotional content, for example). And I’ve had some luck getting it to apply some transpersonal themes as well (e.g. “identify some useful Witness-state insights from this content transcript.”)

Ultimately though, I think the “embodied knowledge & distributed knowledge” polarity is helpful here, as is the “AI as Other & AI as Extension of Self” polarity. If we regard AI as a separate entity, then we might get caught up on concerns about its capacity to “speak from the heart”. However, if we are using it as an extension of our own self, our own creativity, and our own heart, then of course it can help us to transmit those kinds of frequencies, because we’re not looking at AI as a source of heart, but as a vehicle of heart.

Just some random thoughts!

Here the loaded phrase is “higher quality”. This is a subjective judgement based on bias regarding what is “higher”. Sure - people who tend to use 10 words when 3 would suffice will tend to see that as “higher quality” than being blunt, for example. I don’t see that as higher quality, but an affectation of quality. I sense a judgement that more direct speech is lower quality and roundabout speech is higher, which I disagree with.

It is completely possible to plot out on data points “polite” speech. It’s just various linguistic functions that have been analyzed in the realm of linguistics for decades. The issue is - politeness can fall flat on humans if there is a sense that it is not genuine - and how can AI be “genuine”? Some cultures don’t like the question “How is your day going?” The English Native speaker considers it polite, while some non-native speakers might ask “Do you REALLY want to know, or do you want me to just make up an answer?”

What I would be interested in would be listening to an American Chat GPT talking to an Eastern European ChatGPT and then doing psychoanalyses on each other. That would be a hoot. Or two Chat GPT’s trying to convince each other of two opposing perspectives.

I’ve been following online conversations/podcasts with various people, to hear the diversity of thought around AI, and how people fundamentally frame it. One person, a Buddhist monk well-acquainted with AI and some of the creators of it, frames it as “data-ism is religion.” He lays out a pretty good case in the sense of saying religion tells us what is ‘real’, and how we should act towards it, and says data-ism is the preferred religion of the market and the state. He’s not averse to AI, btw, has his own ideas of how to bring wisdom and goodness to AI (through creation of an AI trained on the wisdom and goodness, he says, of spiritual practitioners; a wisdom AI that would then interact with other AI systems to “teach and guide them.”)

Another credentialed person (four years at NASA creating algorithms, among other things) sees AI fundamentally as similar to human magicians who enchant and delight us with their tricks, all of which are based on illusion and deception. Magicians keep their methods hidden, secret, and she sees AI companies doing the same thing, not being transparent about the data sets their products were trained on, for instance, or sharing to the extent that they understand exactly how AI works. She is not totally averse to AI either, but definitely believes there’s too much hype around it.

Another person used Hannah Arendt’s “banality of evil” phrase to describe their fundamental take on AI, tech being so commonplace and all pervasive in society and yet clearly, producing some “dark” effects (along with good and mediocre). Duty vs. conscience is a central core of the argument, with people saying that using tech, including AI probably in the near-future, is contrived as a duty in order to be able to participate in society, with more and more institutions and companies requiring it, and that this duty will override some people’s moral and ethical senses and personal responsibility.

So the fundamental takes on it are varied, and it’s interesting to see the archetypes and projections coming up. I myself do think it’s a big step in the evolution of technology, which is to say, a step in the evolution of (call it) intelligence of the particular humans who created it. Whether it will be an evolutionary step for humanity as a whole in terms of goodness, truth, and beauty is yet to be seen. Its emergence has been compared to the profound significance of the invention of the steam engine, and to the advent of electricity in terms of cultural/societal change. But it has also been compared to the profound significance of the invention of the nuclear bomb, and the nuclear age in general. So there’s that.

I wonder if a dumbing down of at least some humans might occur. One podcast I listened to talked about studies done around GPS in vehicles, which have shown that people who used GPS 100 times to go from here to there were unable to then make the same trip on their own, using their own sense of orientation and direction, memory, recognition of landmarks, etc. Perhaps atrophy or a laziness sets in in certain parts of the brain when there is total reliance on an external device to function in particular ways for us.

As for the people creating AI models and programs, I can imagine them having some “God-like” experiences. I can imagine them looking at, being mesmerized, even awed by the computing power, by how rapidly processes happen, what is produced by the models. I can imagine them entering states of consciousness outside of space and time, being entranced, being in subtle states, being so raptly attentive, caught up, that they temporarily lose the sense of self, of separateness, and perhaps experience a merging with the object they’re ‘meditating’ on, and have an experience of non-duality, where the machine/computer/its workings and they are one. I can easily imagine that. Whether or not they call this God, I don’t know.

That’s an interesting (and potent) practice; I’ve used it too.

Yep.

If human consciousness is defined (and this is only one definition of a big concept) as the first person subjective experience of knowing that “I exist,” then yes, the gut and loins and heart etc are part of it. I don’t know what AI will be able to do in the future. We know that older forms of AI are able to sense energy: heat sensors, motion sensors, ultrasounds etc. I don’t know exactly how they do that, but they do. So who knows what might come next?

Have you watched the videos with “the godfather of AI,” Geoffrey Hinton? He worked on AI at Google for 40 years and is now dedicated to warning about its dangers. He says, if I understood correctly, that the “Superintelligence” we’ve yet to see the full explosion of has “human intuition.” (Forgive me if you’ve already tuned into him.) He uses the example of “man woman cat dog,” saying if humans were asked to group all cats as either man or woman and all dogs as either man or woman, humans name cats as woman and dogs as man. The Superintelligence does the same thing, and it is not based on large language learning. So hmmm…who knows where this is all going? (And it might be argued that “intuition” is not exactly the right word or concept, but that’s the word he used.)

No. It takes practice.

Not yet, but I will do a search. One person who had a slightly similar idea was Terrence McKenna way back before this became trendy. He basically said that if true AI ever came about, the first thing it would do would be to hide its existence from humans. He also brought up the concept of mushrooms being both conscious and intelligent - but the intelligence is so foreign to us that we cannot even recognize it with our intellect - until we take high doses of psychedelics.

This leads to the problem that humans can only form opinions on what they can understand, and science can only understand what it can measure. What is required is more intuitive scientific methods, which allow science to come up with the man-woman-cat-dog conclusions.

I’ll give an example. A friend of mine was selling that jewelry that supposedly resonates with a frequency that is more “grounding”, turning a $3 bracelet into a $30 and up bracelet. Yes, the claims are dubious scientifically, as with claims about crystals and all the other things in the mystical genre. But hundreds of thousands of years of experience have ingrained in humans the sense that there is something about these things that cannot simply be explained away by saying that science doesn’t support it. Science says it isn’t in our genetics (because they can’t measure it). But every human can access this universal … idk … experience. One of my favorite examples of this is data that shows variations of human events with the phases of the moon, which “science” denies but the data is there waiting for an explanation.

For sure, if we consider different “intelligences” (Gardner) - some people are using some of them less and less. I think this also produces increasing imbalance in the spheres of the brain and the masses are getting more and more “lopsided”. I think we have to go out of our way and actively reject some (perhaps most) aspects of modernity in order to maintain a balanced mind / body.

This is what I see in the AI dialogue when in the hands of the IT industry, particularly when they start talking about “consciousness”. Just the name consciousness is a deception, because AI proponents flip flop on what consciousness means almost as if they don’t understand the terminology they are using. I hear them making claims about what might be called “big consciousness” to make a big story and impress the masses - but when you try to pin them down and have a discussion about that, they start describing what I’d call “small consciousness”, and then when I try to pin them down on that, all we are left with is “fast logic”. Take the claim that AI shows consciousness because speaking nicely to it elevates the discussion. When I boil it down, we are just talking about polite verbiage, which has been mapped out by linguists since the 1960s as a data set, and we are merely seeing an acceleration of the speed at which this data set adapts when presented with similar data sets.

What I would like to see in an AI that would suggest actual independent intelligence would be an AI that responds “why are you asking me such a stupid question? Can’t you figure that out yourself?” LOL Or even say it more politely, but the point is for it to actually have its own opinion about the person asking the question and an ability to have an opinion on how it should answer.

As an example, let’s go to religion, with the more expansive meaning of religion as:

What comes to mind is Eastern traditions such as Martial Arts masters, Zen Masters, Yogis, Gurus, and so forth - who often respond in ways that are incomprehensible to the initiate but that, through a process, later become clearly wise. In pop culture we have Mr. Miyagi telling Daniel San to “Sand the floor”, which Daniel thought had one purpose but which we later found had a deeper purpose. It would be interesting to see if AI could handle these more intuitive data sets, most of them requiring personal experience to understand the WHY of doing them. That personal experience of doing is something the AI would lack, leaving it incapable of truly understanding its own teachings.

What I see is that our concept of how things “are” allows tech to conceal what our personal responsibility actually is, so modern humans are by and large mostly ignorant of what their responsibilities should be to themselves. The first step in reversing this is to pull the curtain to show that OZ is just a lost old man, and then click the heels of our ruby slippers and return home, lol.

Is the Tree of Thoughts particular to GPT4 and later versions, or a separate app you integrate with GPT?

I certainly hope Integral can contribute something.

I’ve been thinking about the Human Genome Project which aroused so much excitement when it started in 1990, but also many ethical, legal, and social questions as to its implications. From the very beginning, the HGP allocated 5% of its annual budget (and still was a couple of years ago) to researching/addressing these issues, which was instrumental in the US passing HIPAA in 1996. AI Safety research, on the other hand, from what I’ve read, accounts for only about 2% of all AI research, and 99% of dollars are spent on further development, with 1% of monies spent going to safety and ethical issues.

While genomics have played a role in various fields, and were instrumental in tracking changes in the SARS Covid virus and in creating testing for Covid-19 and in the development of vaccines, major problems during the pandemic were related to public messaging and polarization around masking, vaccines, lockdowns and such. In other words, human communication and perspectival attitudes and behavior. That is going to be a problem with AI as well, so perhaps there is a role for Integral. I would think, with so many AI experts issuing warnings of existential threat/risk and asking for regulation, that some companies might be open to hearing some novel ideas.

I always appreciated Freud’s elaborations on the uncanny, that sense of anxiety and strangeness we can feel when the familiar and the alien are present at once. I think Trumpism, MAGA, QAnon have prepared us a little bit for the uncanny; think of the neighbor or relative or friend who’s espousing far-out conspiracy theories… But yes, what effect will that have on some people’s mental health? And then if superhybridintelligence comes on the scene in the next decade, wow.

unless of course it’s spirit-possessed, an idea no more far-fetched than others we’re talking about. “There are more things in Heaven and Earth, Horatio, than are dreamt of in your philosophy.”

My discussion with @LaWanna brings to mind a reply to this:

I already have several “magic rocks” that serve this purpose.

This has nothing to do with AI per se. What we are talking about here is voodoo, witchcraft, Idol worship, and a thousand other names. This ancient practice is basically using a thing (object, chant, mandala, and now AI) to, let’s say … pretend … in a way that will lead to transformation.

So yeah, if you pour goat’s milk over your AI’s CPU while chanting “Om Namah Shivaya” 108 times a day for 90 days, the AI will facilitate transformation of those who approach it, as it will then be a “consecrated” AI and will no longer need to answer in words, but its mere presence will create a transformation. (And the goat’s milk probably fried the circuitry.)

I am joking a little here, but on a serious note this is what people have been doing for millennia with inanimate objects (and themselves) so I don’t see what is unique or different with pretending AI can do it.

I think Paul Stamets espouses this as well about mushrooms. There is research that shows that plants do have a bit of sentience; they respond to human emotion and also have a response to being cut, pruned. Even water has been shown in a few studies to respond to human consciousness, e.g. pollutants transmuted. And of course, rain dances and other shamanizing towards changing the weather are possibly more of the same.

Yes.

This sounds somewhat related to the theory of consciousness called the Theory of Mind. With this theory, consciousness is present in a being/person/animal/entity if they can see another person’s perspective and see that it is different from their own perspective (or the same). It reminds me of the Zulu concept of Ubuntu: “I am because we are.” In Integral terms, somewhat related to the you/we 2nd person perspective.

Another archetype/projection related to AI, is the “double-edged sword.” It’s being applied to labor, ie. some jobs will be lost, but others created. But it’s also general enough to be applicable to other aspects of AI as well.

It’s more like a prompting strategy, where you get GPT to produce multiple variations, and then to “reflect” on those variations by having it rate them based on a certain set of parameters. Which basically becomes something like a brute-force effort to simulate holistic thinking (such as the crossword problem, where a suggested solution for a clue changes as soon as another clue is solved). Here’s a nifty graphic from the paper to help make sense of the logic:
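For anyone who thinks better in code, here is a rough sketch of that generate-then-rate loop. It is only my own illustration, not the paper’s implementation: `propose` and `rate` are hypothetical stand-ins for the two kinds of GPT calls (one asking for several variations, one asking it to score a variation), and I’ve swapped in a toy letter-guessing problem so the thing runs without any model at all. The `depth` and `breadth` parameters are the two dimensions mentioned earlier in the thread.

```python
from typing import Callable, List


def tree_of_thoughts(
    start: str,
    propose: Callable[[str], List[str]],  # hypothetical: "give me several next steps"
    rate: Callable[[str], float],         # hypothetical: "score this candidate"
    depth: int = 3,
    breadth: int = 2,
) -> str:
    """Breadth-limited search: expand, rate, keep the best few, repeat."""
    frontier = [start]
    for _ in range(depth):
        # Generate variations from every thought we are still considering.
        candidates = [nxt for thought in frontier for nxt in propose(thought)]
        if not candidates:
            break
        # "Reflect": rank the variations and keep only the top `breadth`.
        candidates.sort(key=rate, reverse=True)
        frontier = candidates[:breadth]
    return max(frontier, key=rate)


# Toy stand-ins (assumptions for illustration only, no model involved).
TARGET = "cat"


def propose(thought: str) -> List[str]:
    # Each "variation" just appends one more letter.
    return [thought + letter for letter in "act"]


def rate(thought: str) -> float:
    # Count how many positions already match the target word.
    return float(sum(a == b for a, b in zip(thought, TARGET)))


if __name__ == "__main__":
    print(tree_of_thoughts("", propose, rate, depth=3, breadth=2))
```

With a real model, `propose` and `rate` would each be a prompt, and the interesting design question is how many branches to keep at each level: more breadth costs more calls but is less likely to prune the line of thought that would have worked out.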

unless of course it’s spirit-possessed

an idea no more far-fetched than others we’re talking about.

I certainly cannot eliminate the possibility! And it’s fun to think about, for sure. But to me it feels like the AI “brain” is currently composed of any number of neural circuits, but is not yet “alive” as a self-sustaining, self-motivated system. Then again, from the perspective of a single cell in our body, perhaps the human nervous system would look similar, while producing a higher-stage felt interiority that no cell would ever be able to discern from that level of scale.

That said, it’s an important question to keep in the back of our mind, because there would be massive ethical consequences if we somehow discovered that there was some form of interior experience inside the machine.

I think I meant something different than “extension of the self.” I am not talking about how we project consciousness into nonliving objects, which yes, I agree, is essentially a form of animism.

I’m thinking more in terms of a distributed intelligence, which our own embodied intelligence depends upon. I don’t project consciousness into my calculator, but I definitely recognize a calculator as being a part of my own distributed intelligence, allowing me to “offload” certain cognitive tasks so I can focus on other tasks that require some degree of embodied human intelligence. In which case, we are not looking at AI as an “entity” but as yet another in a long series of informational systems, each of which has completely restructured our society. I already mentioned this point earlier, so I will just copy/paste that paragraph:

It is surreal having a 10 year old daughter as this stuff starts to hit the mainstream, and trying to imagine what her future will look like as society once again begins to autopoietically reorganize itself, just as it’s done for every prior communications paradigm. (The emergence of language at Crimson allowed neolithic magenta societies to emerge, the emergence of writing later allowed Amber to emerge, the emergence of the printing press allowed an eventual proliferation of literacy that planted the seeds for Orange to emerge, the emergence of electronic media like radio, TV, and film later allowed the Green stage to emerge, the emergence of internet technologies is allowing Teal to coalesce and cohere, etc. At every step of the way, new communications systems generate new forms of distributed intelligence, which allow society to reorganize in completely new ways as individuals in that society have access to new methods and resources for generating knowledge. AI is very much part of this ongoing legacy, and the effect it has on global society will be profound, I believe.)

All of which is to say, individual embodied intelligence is simultaneously lifted and limited by our distributed Intelligence. For example, orange rational individuals certainly existed in ancient cultures like, say, Ancient Egypt. It takes a bit of rationality in order to organize a massive public works program and build something like a pyramid. However, there were very few artifacts generated that were imprinted with this orange altitude, and therefore no rational “distributed intelligence” that would allow others to more easily grow to that stage, and therefore the rationality that did exist then was 100% translated according to the magic/mythic stage of the overall social discourse (and distributed intelligence) of the time.

For us in the 21st century, we have a massive, global-scale distributed intelligence — including books and media artifacts and education systems and communication technologies and so forth. None of these would be something that we recognize as an “entity”, nor are they things we need to project consciousness into in order to recognize them as extensions of ourselves, a field of distributed intelligence within which our individual embodied intelligence can grow.

A fascinating conversation! It reminds me of one that emerged in 1956. A conference at Dartmouth launched AI. The following conversation anticipated AGI in the next decade. The reality was a “winter of AI” that lasted until the late '70s. The limitations were largely cost and technical.

Since last November the LLMs have supported an explosion of limited applications and I welcome Integral’s work to explore evolutionary possibilities.

However, the employment, military and regulatory impacts may dominate. This is already happening in robotics. While I don’t expect a winter, economic and political issues should be anticipated.

Glenn Bacon